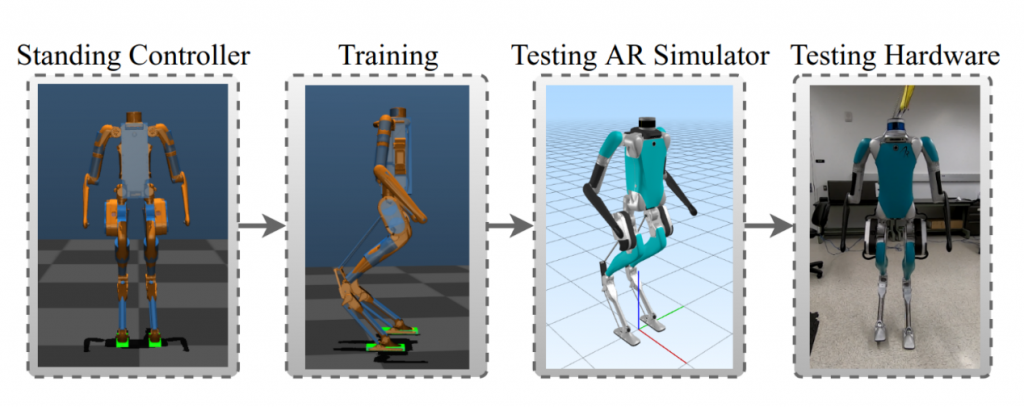

Dynamic locomotion for humanoids is arguably one of the most challenging problems in legged robotics, and in robotics more broadly. Reinforcement learning techniques have recently attracted considerable research attention. In this paper, we investigate the humanoid locomotion problem using reinforcement learning and develop a hierarchical, robust reinforcement learning framework. To the best of our knowledge, this is the first time a learning-based policy has been successfully transferred to the hardware of Digit, the 3D bipedal robot built by Agility Robotics, without using dynamics randomization or curriculum learning.

We propose a cascade-structure controller that combines the learning process with intuitive feedback regulation. This design allows the framework to realize robust and stable walking with reduced-dimension state and action spaces for the policy, which significantly simplifies the design and improves the sample efficiency of the learning method. Including feedback regulation in the framework improves the robustness of the learned walking gait and ensures successful sim-to-real transfer of the proposed controller with minimal tuning.
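To make the idea of a cascade structure concrete, the sketch below shows a generic two-rate loop in which an outer learned policy emits a low-dimensional action at a slow rate and an inner feedback law regulates the robot toward the resulting targets on every tick. This is an illustrative assumption, not the paper's controller: the joint-space PD law, the `expand` mapping from reduced action to joint targets, `policy_period`, and all gain values are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class PDGains:
    kp: float  # proportional gain (hypothetical value, not from the paper)
    kd: float  # derivative gain (hypothetical value, not from the paper)


def pd_torque(q_des: Sequence[float], q: Sequence[float],
              dq: Sequence[float], gains: PDGains) -> List[float]:
    """Joint-space PD feedback: tau_i = kp * (q_des_i - q_i) - kd * dq_i."""
    return [gains.kp * (qd - qi) - gains.kd * dqi
            for qd, qi, dqi in zip(q_des, q, dq)]


class CascadeController:
    """Hypothetical two-rate cascade: an outer learned policy emits a
    reduced-dimension action every `policy_period` ticks; an inner PD loop
    tracks the expanded joint targets on every tick."""

    def __init__(self, policy: Callable[[Sequence[float]], List[float]],
                 expand: Callable[[List[float]], List[float]],
                 gains: PDGains, policy_period: int) -> None:
        self.policy = policy                # reduced observation -> reduced action
        self.expand = expand                # reduced action -> full joint targets
        self.gains = gains
        self.policy_period = policy_period  # inner ticks per outer policy update
        self.tick = 0
        self.q_des: Optional[List[float]] = None

    def step(self, obs: Sequence[float], q: Sequence[float],
             dq: Sequence[float]) -> List[float]:
        # Outer loop: query the learned policy only at the slower rate.
        if self.q_des is None or self.tick % self.policy_period == 0:
            self.q_des = self.expand(self.policy(obs))
        self.tick += 1
        # Inner loop: feedback regulation toward the latest targets.
        return pd_torque(self.q_des, q, dq, self.gains)
```

Because the policy only has to output the reduced action, its action space stays small, while the inner feedback loop absorbs tracking errors between policy updates; this is one plausible reading of why such a split can help both sample efficiency and robustness.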

We also present a learning pipeline that incorporates hardware-feasible initial poses of the robot into the learning process, so that the initial state used in training matches the initial state of the robot in hardware experiments as closely as possible. Finally, we demonstrate the feasibility of our method by successfully transferring the policy learned in simulation to the Digit hardware, realizing sustained walking under external force disturbances and on challenging terrains not included in training.
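One simple way to realize the idea of training from hardware-feasible initial poses is to reset each simulated episode from a set of poses known to be reachable on the real robot. The sketch below assumes such a set of recorded joint configurations exists; the function name `sample_initial_pose`, the optional Gaussian perturbation, and the clipping to joint limits are all hypothetical choices for illustration, not details taken from the paper.

```python
import random
from typing import List, Sequence, Tuple


def sample_initial_pose(feasible_poses: Sequence[Sequence[float]],
                        joint_limits: Sequence[Tuple[float, float]],
                        noise_std: float,
                        rng: random.Random) -> List[float]:
    """Draw an episode's initial joint configuration from poses assumed to be
    reachable on hardware, with optional small noise clipped to joint limits."""
    base = rng.choice(feasible_poses)  # a recorded hardware-feasible pose
    pose = []
    for q, (lo, hi) in zip(base, joint_limits):
        # Hypothetical perturbation; noise_std = 0 reproduces the pose exactly.
        q_noisy = q + rng.gauss(0.0, noise_std) if noise_std > 0 else q
        pose.append(min(max(q_noisy, lo), hi))  # keep within joint limits
    return pose
```

Setting `noise_std` to zero replays the recorded poses exactly, which corresponds most directly to matching the hardware initial state; a small positive value would be a further assumption trading exactness for coverage.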

Preprint available at https://arxiv.org/abs/2103.15309

Video link: https://youtu.be/j8KbW-a9dbw